Portfolio

Please keep in mind that this is a list of the major projects I was involved in.

I also did several other backend API projects that are not listed here (NDA).

Plus, there are a lot of scripts/parsers (love parsers!) and Ruby gems I've created over these 14 years.

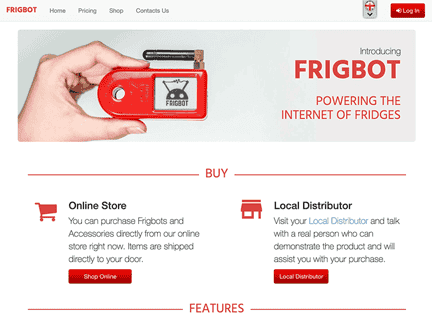

Frigbot (IoT)

Legacy Rails code refactoring, telecom integrations, and building a high-load IoT cloud

Higgins MD 420

Refactoring and developing a website for a medical marijuana evaluation clinic